"Life is a series of events... We, human clusters, produce and consume messages"

~ Laptop and the Lady

Apache Kafka is a distributed streaming platform that can be used to build real-time data pipelines and streaming applications. At its core, Kafka is designed to handle large amounts of data in a scalable and fault-tolerant way. But what does all of this mean, and how can we understand it using an everyday analogy?

Kafka: Events and Problems Solution...

Imagine that you are in charge of waste collection for a large city. You have a fleet of garbage trucks that drive around the city, collecting garbage from individual homes and businesses. Each home or business has a garbage bin with a limited amount of space, and once a bin is full, it needs to be emptied by one of these trucks, which then returns to the depot to unload. Your job is to ensure that the garbage is collected efficiently and on time, without any bin overflowing or any collection being delayed.

Now, let's imagine that each garbage bin represents a different data source in your organization. For example, the bin at home A might represent your web server logs, another might represent your user database, and a third might represent your social media feeds. Each of these sources generates a constant stream of data that needs to be processed and analyzed in real time.

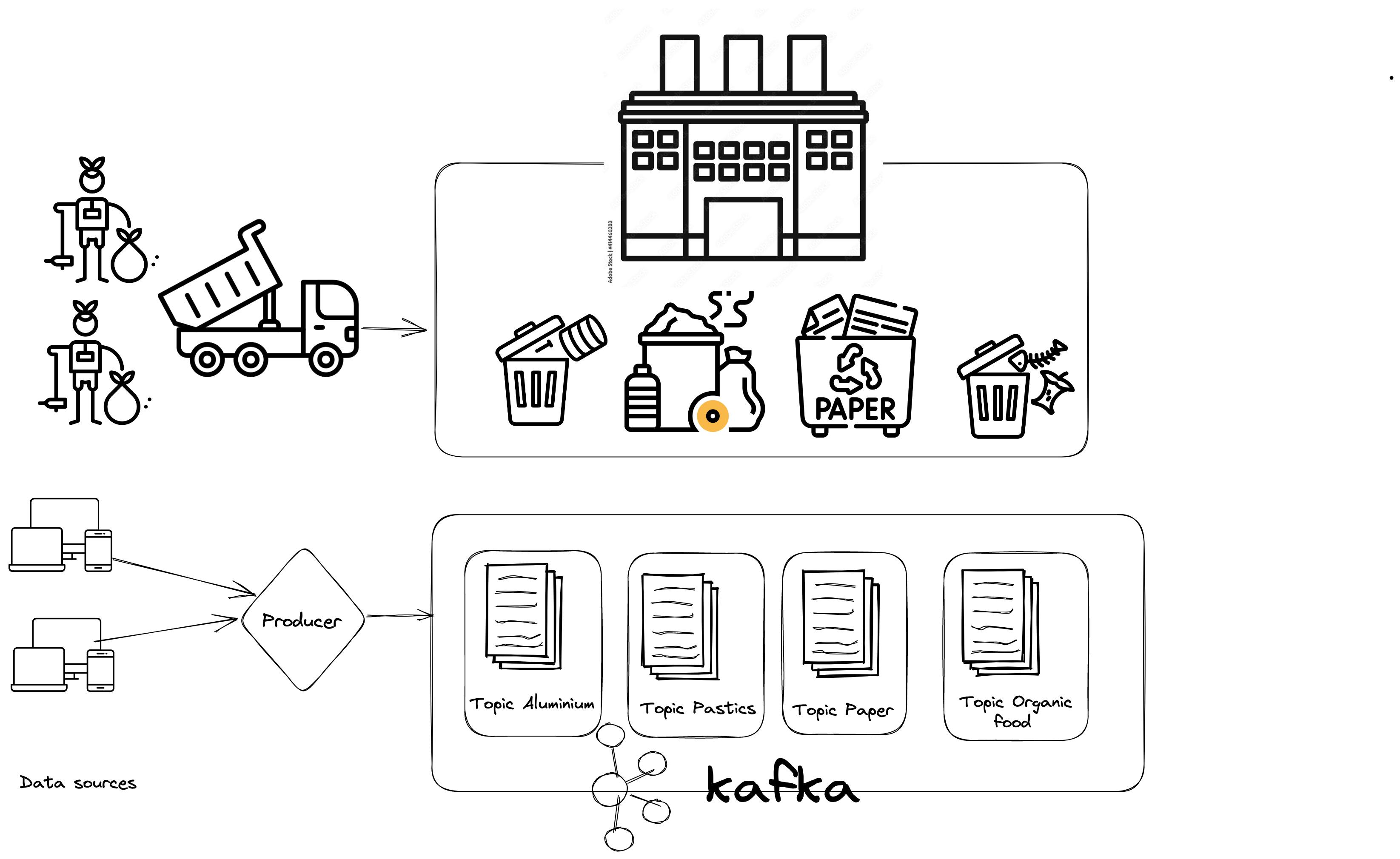

This is where Apache Kafka comes in. Kafka acts as a central hub for all of your data sources, allowing you to collect, process, and analyze data in real-time. In our waste collection analogy, Kafka would be the depot where all of the garbage trucks return to be emptied. Instead of having each truck drive directly to the landfill, they first stop at the depot to drop off their garbage. This allows you to consolidate and organize the garbage before it is sent to its final destination.

These garbage trucks represent producers in Kafka. Producers are applications that collect data from different sources (a producer can also be one of these data sources itself) and send it to Kafka. Just as multiple garbage trucks move around the city, there can be more than one Kafka producer.

The waste is a metaphor for events or messages in Kafka. These are the data coming from your sources, and they can represent anything: the number of button clicks, the amount of time a user has spent on a specific screen, or an order confirmation. Kafka is agnostic about the content of the messages it is sent.

Once the garbage trucks have returned to the depot, you cannot simply pile the waste up in one large heap, since that would be messy and difficult to handle (and we are trying to be a sustainable city!). So, you decide to sort the waste into different types, like aluminium, plastic, paper, and food waste.

Each type of waste is like a topic in Apache Kafka. Just like you have different types of waste, you can have different topics for different types of data.

Now imagine that you have a lot of plastic waste that needs to be collected. Instead of putting it all in one big pile, you decide to split it up into smaller groups based on the location where it was collected. Each group of plastic waste is like a partition in Apache Kafka.

So just like you have different types of waste (topics), you can also split up each type of waste into smaller groups based on where it came from (partitions). This makes it easier to manage and process the waste (data) in a more organized and efficient way.

In Kafka, data is organized into topics, which represent different streams of data. Each topic is divided into one or more partitions, and each partition is an ordered, append-only log that holds a subset of the topic's events. For example, you might have a topic for your web server logs whose entries are spread across several partitions, keyed by the server that produced them, so that entries from one server stay together and in order.
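To make the topic and partition idea concrete, here is a minimal sketch in plain Python. It is a toy model, not the Kafka client API: `ToyTopic`, the topic name, and the district keys are all made up for illustration. It also assumes a simple `crc32` hash to pick a partition, whereas Kafka's default partitioner for keyed records uses a murmur2 hash.

```python
import zlib


class ToyTopic:
    """A toy, in-memory stand-in for a Kafka topic (hypothetical, not the real API)."""

    def __init__(self, name, num_partitions):
        self.name = name
        # Each partition is an append-only list of (key, value) events.
        self.partitions = [[] for _ in range(num_partitions)]

    def partition_for(self, key):
        # The same key always maps to the same partition.
        # crc32 is an assumption for this sketch; Kafka itself uses murmur2.
        return zlib.crc32(key.encode("utf-8")) % len(self.partitions)

    def append(self, key, value):
        index = self.partition_for(key)
        self.partitions[index].append((key, value))
        return index


# One topic per type of waste, split into piles by collection district.
plastic = ToyTopic("plastic-waste", num_partitions=3)
districts = ["north", "south", "east", "north", "south", "north"]
placed = [plastic.append(d, f"bag-{i}") for i, d in enumerate(districts)]

north_pile = plastic.partitions[plastic.partition_for("north")]
print([value for key, value in north_pile if key == "north"])
```

Every bag from the "north" district ends up in the same pile, in the order it arrived, which is the property that keyed partitioning gives you.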

In our analogy, a garbage truck delivering waste to the pile for a particular type of waste at the depot corresponds to "producing" to a Kafka topic. In other words, we say that a producer produces an event to a topic.

The Kafka producer is responsible for collecting data from various sources and producing it into a Kafka topic, similar to how a garbage truck collects waste from residential or commercial establishments and transports it to the appropriate waste transfer station or disposal site.
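A producer's job can be sketched in a few lines of plain Python. This is a toy model under stated assumptions: `ToyProducer`, the `depot` dictionary, and the topic names are hypothetical, and JSON is just one possible serialization. The point it illustrates is that the producer turns each event into bytes before sending, which is why Kafka does not need to care what the events mean.

```python
import json


class ToyProducer:
    """A toy producer that writes into a shared in-memory 'depot' (hypothetical)."""

    def __init__(self, depot):
        # depot maps a topic name to a list of (key_bytes, value_bytes) records.
        self.depot = depot

    def send(self, topic, key, value):
        # Serialize on the producer side; the depot only ever sees bytes.
        record = (key.encode("utf-8"), json.dumps(value).encode("utf-8"))
        self.depot.setdefault(topic, []).append(record)
        # Return the position of the record within the topic's list.
        return len(self.depot[topic]) - 1


depot = {}
truck_a = ToyProducer(depot)
truck_b = ToyProducer(depot)

# Several producers can write to the same topic, and one producer to several topics.
truck_a.send("clicks", "user-1", {"button": "checkout"})
truck_b.send("clicks", "user-2", {"button": "search"})
truck_a.send("orders", "user-1", {"order_id": 42, "status": "confirmed"})

print(sorted(depot))
```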

Consumers in Kafka are like waste processing plants that receive waste from different garbage trucks and process it further. In Kafka, consumers read data from one or more partitions of a topic and process it as needed. For example, a consumer might read data from a partition that contains web server logs and process it to generate analytics reports.

Imagine that our depot has four different waste processing plants that all process paper waste, and that the paper waste is divided into four different piles. Each plant can take one pile and work through it without waiting for the others. In the same way, Kafka lets the consumers in a consumer group divide a topic's partitions between them and read in parallel, with no central queue forcing them to take turns.
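How piles get shared out between plants can be sketched as a simple round-robin assignment. This is a toy illustration, not Kafka's own code: `assign_partitions` and the plant names are hypothetical, and Kafka offers several assignment strategies, of which round-robin is only one.

```python
def assign_partitions(partitions, consumers):
    """Give each partition to exactly one consumer, round-robin (toy sketch)."""
    assignment = {consumer: [] for consumer in consumers}
    for i, partition in enumerate(sorted(partitions)):
        owner = consumers[i % len(consumers)]
        assignment[owner].append(partition)
    return assignment


piles = [0, 1, 2, 3]  # four piles of paper waste (partitions)

four_plants = assign_partitions(piles, ["plant-a", "plant-b", "plant-c", "plant-d"])
two_plants = assign_partitions(piles, ["plant-a", "plant-b"])
five_plants = assign_partitions(
    piles, ["plant-a", "plant-b", "plant-c", "plant-d", "plant-e"]
)

print(four_plants)  # one pile per plant
print(two_plants)   # two piles per plant
print(five_plants)  # the fifth plant has nothing to do
```

With more plants than piles, the extra plant sits idle, which is why the number of partitions puts an upper bound on how many consumers in a group can work in parallel.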

An important concept in Apache Kafka is the concept of offsets. Offsets are like the address of a specific event or message within a partition. In Kafka, each message within a partition is assigned an offset, which represents its position within the partition. This allows consumers to keep track of which messages they have already processed and which ones they still need to process.
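The bookkeeping that offsets make possible can be sketched with a list and a counter. This is a toy model with hypothetical names (`ToyPartition`, `ToyConsumer`); a real consumer commits its offsets back to Kafka rather than holding them in a local variable.

```python
class ToyPartition:
    """An append-only log; an event's offset is its index in the list."""

    def __init__(self):
        self.log = []

    def append(self, event):
        self.log.append(event)
        return len(self.log) - 1  # the offset assigned to this event


class ToyConsumer:
    """Reads a partition in order and remembers where it got to."""

    def __init__(self, partition, start_offset=0):
        self.partition = partition
        self.next_offset = start_offset  # offset of the next unread event

    def poll(self, max_events=2):
        end = self.next_offset + max_events
        batch = self.partition.log[self.next_offset:end]
        self.next_offset += len(batch)
        return batch


pile = ToyPartition()
offsets = [pile.append(f"bale-{i}") for i in range(5)]

plant = ToyConsumer(pile)
first_batch = plant.poll()          # reads offsets 0 and 1
saved_position = plant.next_offset  # pretend this is stored somewhere durable

# After a restart, a new consumer resumes from the saved position.
restarted_plant = ToyConsumer(pile, start_offset=saved_position)
second_batch = restarted_plant.poll()

print(first_batch, saved_position, second_batch)
```

Because the position is just a number, a consumer that stops and restarts can pick up exactly where it left off without re-reading what it has already handled.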

In our waste collection analogy, an offset would be like the position of a specific piece of waste (event or message) within a specific group of waste (partition). This allows the waste processing plants (consumers) to keep track of which waste they have already processed and which they still need to process.

Kafka: fly like a bird Scalability and Security

Replication, a feature of Kafka, ensures that data is not lost in the event of a failure. This is similar to having a backup garbage truck that can take over if the primary truck breaks down. In Kafka, each partition can have multiple replicas, which are copies of the same data stored on different servers. If one server fails, the data can still be retrieved from the other replicas.
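The failover idea can be sketched as follows. This is a deliberately simplified toy model with hypothetical names: it copies every event to all live replicas at once and picks the lowest-numbered surviving broker as the new leader, whereas real Kafka has followers fetch from the leader and elects a new leader from the replicas that are in sync.

```python
class ToyReplicatedPartition:
    """One partition with a copy on several brokers (simplified sketch)."""

    def __init__(self, broker_ids):
        self.replicas = {broker: [] for broker in broker_ids}
        self.alive = set(broker_ids)
        self.leader = broker_ids[0]

    def append(self, event):
        # Simplification: write to every live replica synchronously.
        for broker in self.alive:
            self.replicas[broker].append(event)

    def fail(self, broker_id):
        self.alive.discard(broker_id)
        if broker_id == self.leader:
            if not self.alive:
                raise RuntimeError("no replicas left, data is unavailable")
            # Assumption for this sketch: lowest surviving id takes over.
            self.leader = min(self.alive)

    def read(self):
        return list(self.replicas[self.leader])


paper = ToyReplicatedPartition([1, 2, 3])
paper.append("bale-0")
paper.append("bale-1")

paper.fail(1)  # the broker holding the leader copy goes down
after_failover = paper.read()

paper.append("bale-2")  # writes continue on the surviving replicas
print(paper.leader, after_failover, paper.read())
```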

For waste depots to function, they need proper infrastructure. In our analogy, this infrastructure plays the role of Kafka brokers. A Kafka cluster consists of one or more brokers, which are servers that store and manage data. Each broker can handle multiple partitions, and the partitions of a topic can be spread across multiple brokers for redundancy and load balancing. This allows Kafka to handle large volumes of data in a scalable and fault-tolerant way.

Apache Kafka can handle data processing on a massive scale. It is designed for large streams of data, and large deployments can process millions of messages per second. This is critical for companies that need to process data in real time, such as financial institutions, telecommunications providers, or social media platforms.
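One way to picture partitions being spread across brokers is a simple staggered placement. This is only a sketch: `place_replicas` is a hypothetical helper, and Kafka's real placement logic is more involved (for example, it can take racks into account).

```python
def place_replicas(num_partitions, broker_ids, replication_factor):
    """Stagger each partition's replicas across brokers (toy sketch)."""
    if replication_factor > len(broker_ids):
        raise ValueError("cannot place more replicas than there are brokers")
    placement = {}
    for partition in range(num_partitions):
        placement[partition] = [
            broker_ids[(partition + r) % len(broker_ids)]
            for r in range(replication_factor)
        ]
    return placement


# Four partitions, three brokers, two copies of each partition.
layout = place_replicas(num_partitions=4, broker_ids=[1, 2, 3], replication_factor=2)
print(layout)  # {0: [1, 2], 1: [2, 3], 2: [3, 1], 3: [1, 2]}
```

No partition has both of its copies on the same broker, and every broker carries a share of the load, so losing any single broker leaves at least one copy of each partition available.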

Kafka also supports SSL/TLS encryption and authentication, which allows you to secure your data in transit. Just as waste needs to be transported securely and safely, Kafka allows you to protect your data as it travels between producers, brokers, and consumers.

In addition to SSL/TLS encryption and authentication, Apache Kafka also supports authorization through access control lists (ACLs). This allows you to control who has access to your data and what actions they can perform on it.

ACLs in Kafka are like security guards that control who can enter a waste disposal facility and what they can do there. Similarly, in Kafka, ACLs control who can access your data and what actions they can perform on it.

Authorization in Kafka is like a set of rules that define what waste can and cannot be disposed of in certain areas. Similarly, in Kafka, authorization rules define what data can and cannot be accessed by certain users or groups.
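The security guard's rulebook can be sketched as a list of rules and a lookup. This is a toy model, not Kafka's authorizer: the `Acl` class, the principals, and the topic names are hypothetical. It does follow two behaviours of Kafka's ACL model that are worth knowing: a matching deny rule wins over an allow rule, and, by default, a request with no matching rule is refused.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Acl:
    principal: str   # who, e.g. "User:truck-a"
    operation: str   # what, e.g. "WRITE" or "READ"
    topic: str       # on which topic
    permission: str  # "ALLOW" or "DENY"


def is_authorized(acls, principal, operation, topic):
    """Return True only if an ALLOW rule matches and no DENY rule does."""
    matching = [
        acl for acl in acls
        if (acl.principal, acl.operation, acl.topic) == (principal, operation, topic)
    ]
    if any(acl.permission == "DENY" for acl in matching):
        return False  # deny takes precedence
    return any(acl.permission == "ALLOW" for acl in matching)


rules = [
    Acl("User:truck-a", "WRITE", "paper-waste", "ALLOW"),
    Acl("User:plant-1", "READ", "paper-waste", "ALLOW"),
    Acl("User:plant-2", "READ", "paper-waste", "ALLOW"),
    Acl("User:plant-2", "READ", "paper-waste", "DENY"),
]

print(is_authorized(rules, "User:truck-a", "WRITE", "paper-waste"))  # True
print(is_authorized(rules, "User:truck-a", "READ", "paper-waste"))   # False
print(is_authorized(rules, "User:plant-2", "READ", "paper-waste"))   # False
```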

Authentication in Kafka is like a security checkpoint that verifies the identity of garbage trucks entering a waste disposal facility. Similarly, in Kafka, authentication verifies the identity of producers and consumers accessing your data.

Kafka supports several authentication mechanisms, such as SSL/TLS client certificates and SASL (Simple Authentication and Security Layer), which in turn covers options like Kerberos, SCRAM, and OAuth bearer tokens. These mechanisms verify the identity of producers and consumers before they can connect; deciding what each verified identity is allowed to do is the job of authorization.
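On the client side, these choices usually come down to a handful of configuration settings. The sketch below only builds a settings dictionary and does not connect to anything. The key names follow the style used by librdkafka-based clients (such as the confluent-kafka Python package); the helper name, host name, credentials, and file path are all placeholders invented for illustration.

```python
def sasl_ssl_settings(username, password, ca_path):
    """Build client settings for SASL authentication over TLS (illustrative only)."""
    return {
        # Hypothetical broker address; replace with your own.
        "bootstrap.servers": "broker.example.internal:9093",
        # Encrypt the connection with TLS and authenticate with SASL.
        "security.protocol": "SASL_SSL",
        # SCRAM is one of the SASL mechanisms Kafka supports.
        "sasl.mechanism": "SCRAM-SHA-512",
        "sasl.username": username,
        "sasl.password": password,
        # CA certificate used to verify the broker's identity.
        "ssl.ca.location": ca_path,
    }


settings = sasl_ssl_settings("truck-a", "not-a-real-password", "/path/to/ca.pem")
for key in sorted(settings):
    shown = "***" if key == "sasl.password" else settings[key]
    print(f"{key} = {shown}")
```

In practice the password would come from a secret store or environment variable rather than being written in code.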

To keep your data safe and secure, Kafka offers a full range of security capabilities, from authentication and authorisation to auditing and monitoring.

Produce "summary" to topic partition...

In this article, we discussed Kafka in general, along with its producer, topic, partition, offset, and consumer. We briefly discussed the Kafka broker, as well as authentication and authorization. We discovered that Apache Kafka places a priority on security and that its security measures work to protect your data and limit access to it to authorised users. We gained a better understanding of the fundamental Kafka features and how they may help organisations by using the analogy of waste disposal and collection.

Unusual subheadings? The Lady chose any subheading she could because she was at a loss for ideas and decided to ignore the Laptop's adamant objections. The Lady seems unconcerned with opinions in 2023. "Soon, I must start hunting for a new owner", the Laptop thought to itself.

The first instalment of a potential Kafka series (no guarantees!). Let me know what you think about this article!

This Lady will now rest until another time.